The productivity paradox of AI coding assistants

Our development team at Cerbos is split into two camps. One side uses AI coding help like Cursor or Claude Code and sees it as the fastest way to ship. The other side has the typical “meh” reaction you often see on Reddit, arguing that AI assistance is mostly a racket.

Some of us lean on AI coding to push side projects faster into the delivery pipeline. These are not core product features but experiments and MVP-style initiatives. For bringing that kind of work to its first version, the speed-up is real.

AI coding assistants promise less boilerplate, fewer doc lookups, and quicker iteration. From my perspective, they deliver on that promise when building MVPs, automations, and hobby projects. Outside of those use cases, the picture changes. You may feel like you are moving quickly, but getting code production ready often takes longer.

Let’s dig into the data.

- Dopamine vs. reality

- The quality problem

- So where is the magical 10x productivity boost?

- Security is where the gap shows most clearly

- How AI assistants create new attack surfaces

- The 70% problem

- Business opinion vs. developer opinion

Dopamine vs. reality

AI coding assistants feel productive because they give instant feedback. You type a prompt and code drops in right away. That loop feels like progress, the same reward you get from closing a ticket or fixing a failing test. The problem is that dopamine rewards activity in the editor, not working code in production.

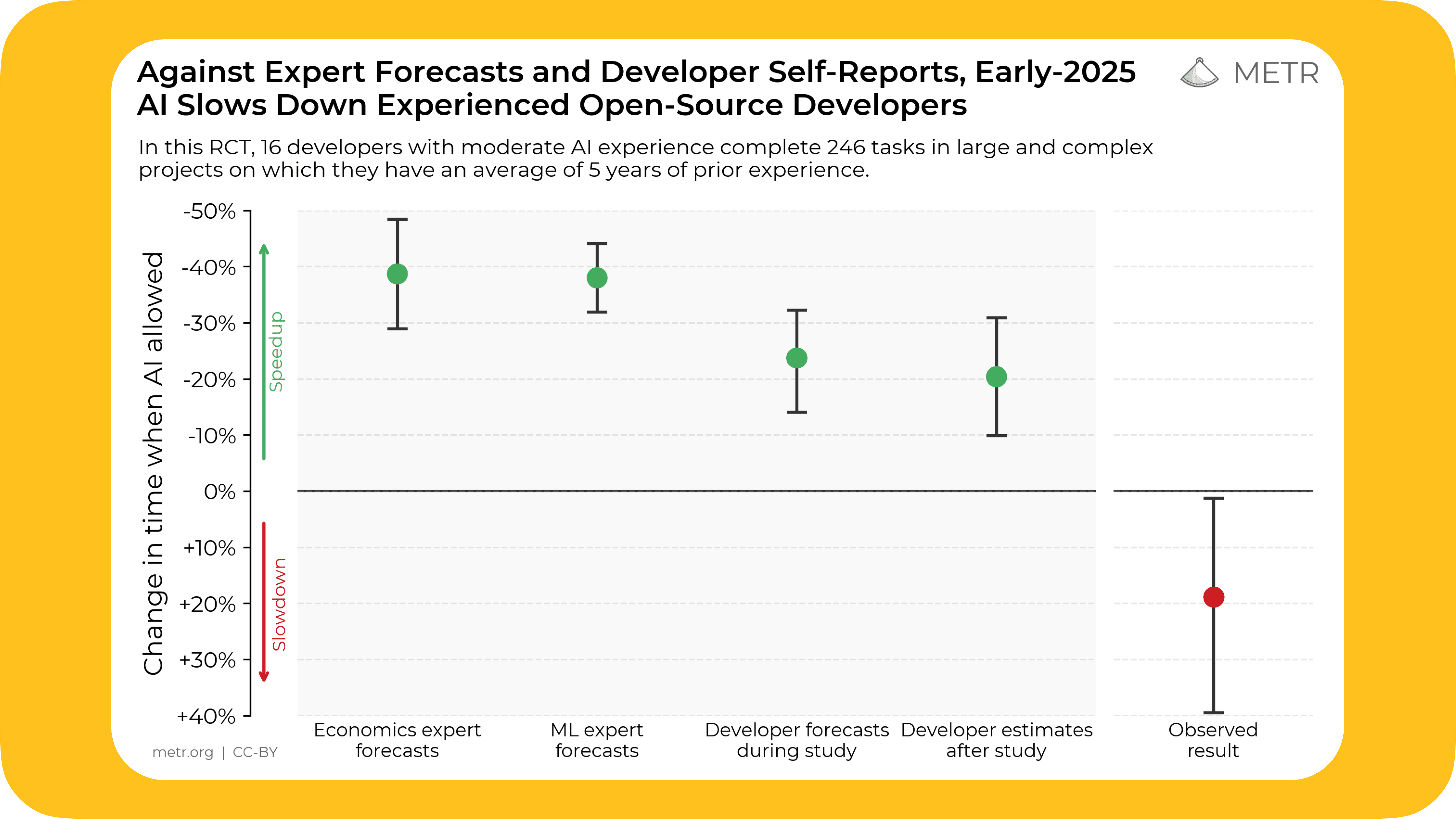

The METR randomized trial in July 2025 with experienced open source developers showed how strong this illusion is. Each task was randomly assigned to either allow or disallow AI tools, so the same developers worked under both conditions. Participants mainly used Cursor Pro with Claude 3.5 and 3.7 Sonnet, which we use internally as well. With AI allowed, tasks took on average 19% longer. Yet the developers were convinced they had been faster.

- Before starting, they predicted AI would make them 24% faster.

- After finishing, even with slower results, they still believed AI had sped them up by ~20%.
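To make the size of that gap concrete, here is a minimal sketch that applies the three percentages to a single task. It assumes each figure is a change in completion time, and the 120-minute baseline is a made-up number for illustration, not something from the study.

```python
def time_with_change(baseline_minutes: float, percent_change: float) -> float:
    """Task time after a percentage change in completion time.

    Negative values mean faster, positive values mean slower.
    """
    return baseline_minutes * (1 + percent_change / 100)


# Hypothetical task that takes two hours without AI (assumption, not study data).
baseline = 120.0

forecast = time_with_change(baseline, -24)   # what developers predicted
perceived = time_with_change(baseline, -20)  # what they believed afterwards
measured = time_with_change(baseline, 19)    # what the trial measured

# Gap between how long the task felt and how long it actually took.
perception_gap = measured - perceived

print(f"forecast:  {forecast:.1f} min")
print(f"perceived: {perceived:.1f} min")
print(f"measured:  {measured:.1f} min")
print(f"gap between perceived and measured: {perception_gap:.1f} min")
```

On these assumptions, a task that felt like it took a little over an hour and a half actually took well over two hours.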

The chart below from the study makes the point clearly. The green dots show developer expectations and self-reports, while the red dots show actual performance. AI coding “felt faster”, but in reality, it slowed experienced developers down.

Marcus Hutchins described this perfectly in his essay:

“LLMs inherently hijack the human brain’s reward system… LLMs give the same feeling of achievement one would get from doing the work themselves, but without any of the heavy lifting.”

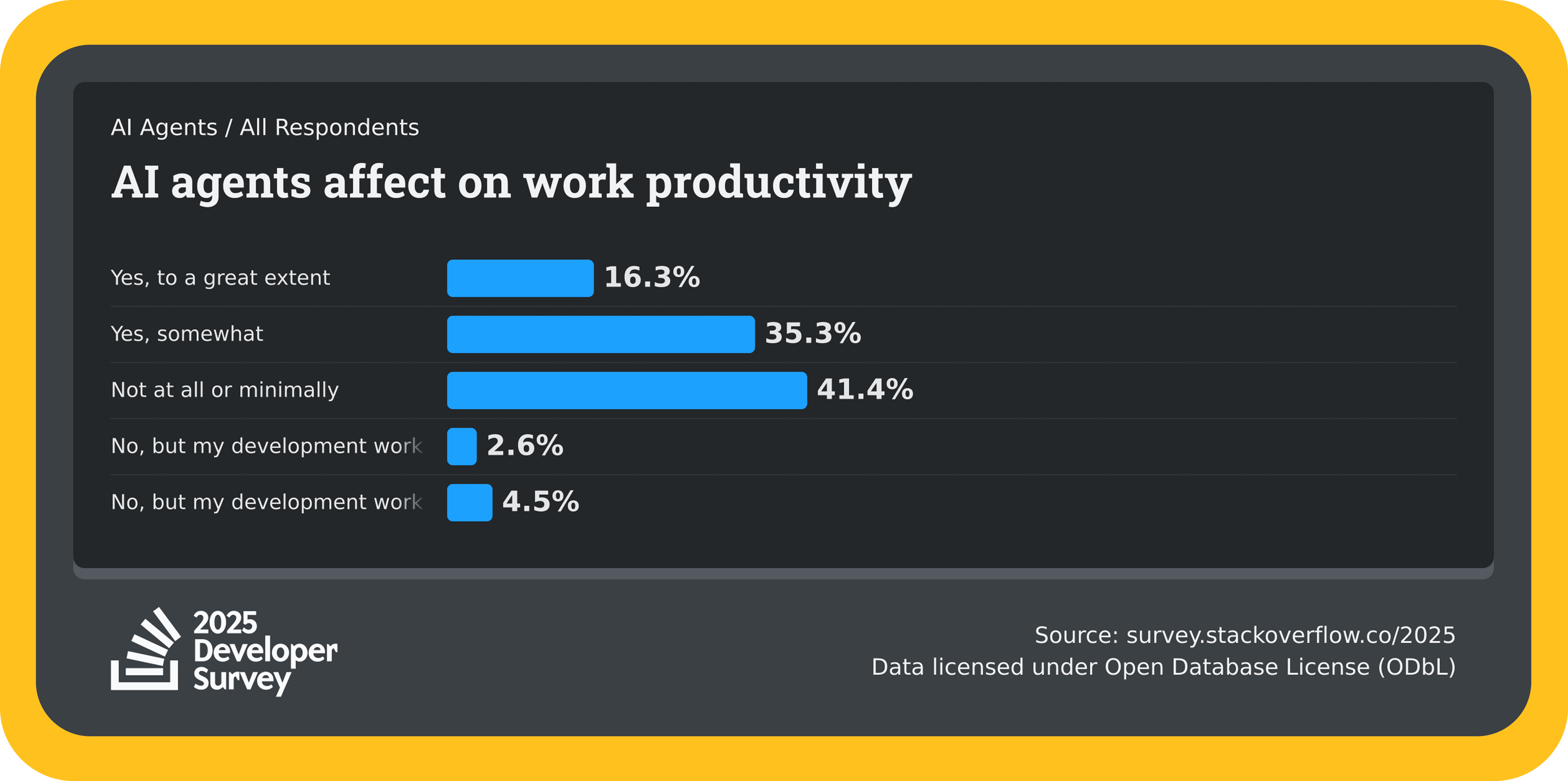

That gap between perception and reality is the productivity placebo. It also shows up in the 2025 Stack Overflow Developer Survey. Only 16.3% of developers said AI made them more productive to a great extent. The largest group, 41.4%, said it had little or no effect. Most developers were in the middle, reporting “somewhat” better output. That lines up with the METR findings. AI feels faster, but the measurable gains are marginal or even negative.

The quality problem

Speed is one thing, but quality is another. Developers who have used AI assistants in long sessions see the same pattern: output quality gets worse the more context you add. The model starts pulling in irrelevant details from earlier prompts, and accuracy drops. This effect is often called context rot.

More context is not always better. In theory, a bigger context window should help, but in practice, it often distracts the model. The result is bloated or off-target code that looks right but does not solve the problem you are working on.

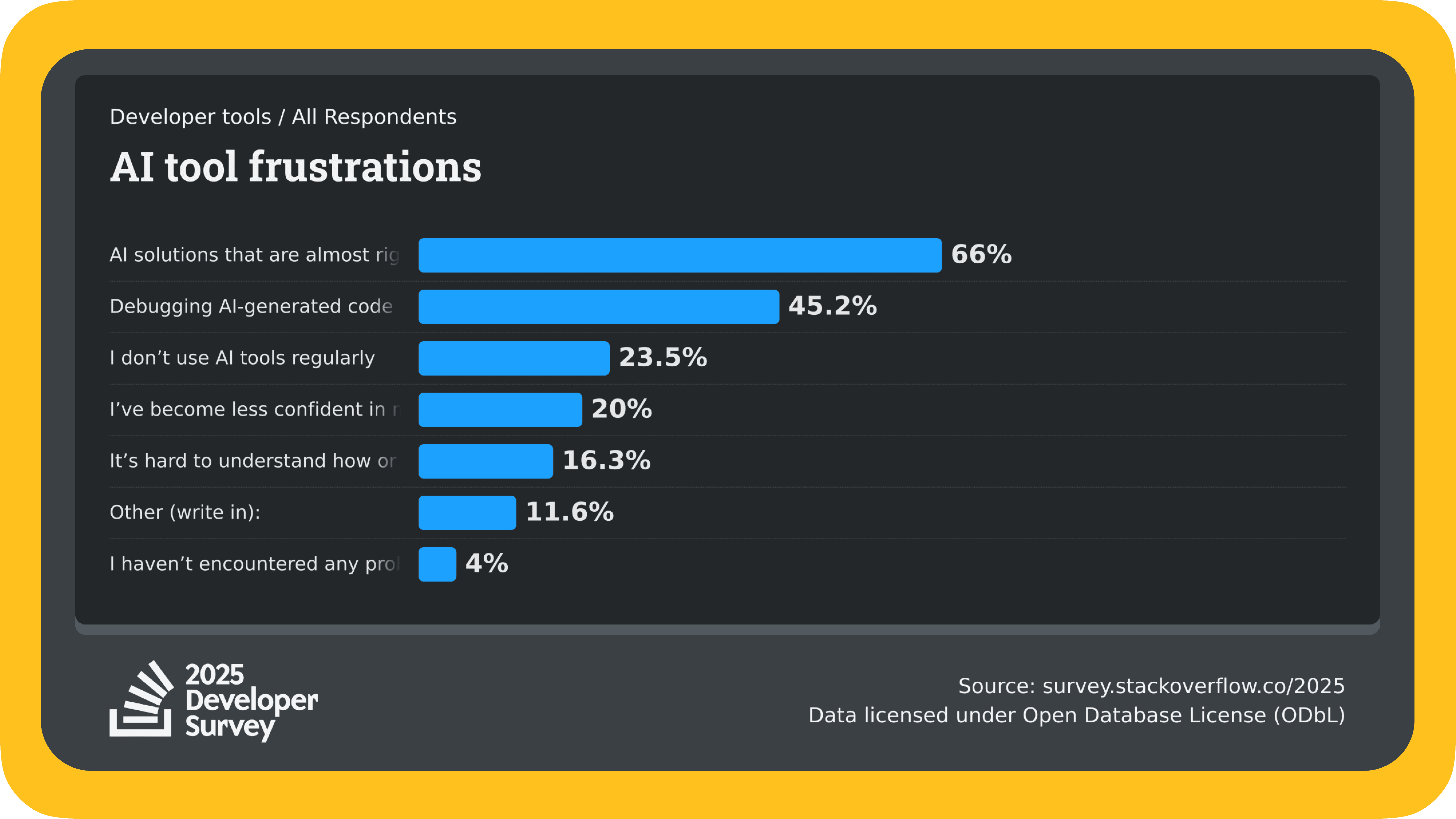

In the Stack Overflow Developer Survey of more than 90,000 developers, 66% said the most common frustration with AI assistants is that the code is “almost right, but not quite.” Another 45.2% pointed to time spent debugging AI-generated code.

That lines up with what most of us see in practice. As one of my teammates from the dev team mentioned in our discussion: “A lot of the positive sentiments you’d read about online are likely because the problems these people are solving are repetitive, boilerplate-y problems solved a gazillion times before. I don’t trust the code output from an agent... It’s dangerous and (in the rare case that the code is effective) an instant tech-debt factory. That said, I do sometimes find it a useful way of asking questions about a codebase, a powerful grokking tool if you will”. I can’t agree more.

So where is the magical 10x productivity boost?

The claim that AI makes developers 10x more productive gets repeated often, but the math does not hold up. A 10x boost means that what used to take three months now takes about a week and a half. Anyone who has actually shipped complex software knows that is impossible.

The bottlenecks are not typing speed. They are design reviews, PR queues, test failures, context switching, and waiting on deployments.
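One way to reason about this is Amdahl's law: if only part of the delivery time is spent writing code, speeding up that part has a capped effect on the whole. The sketch below assumes a hypothetical split where 30% of delivery time is hands-on coding; that share is an illustrative guess, not a measured figure.

```python
def overall_speedup(coding_share: float, coding_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only the coding share gets faster.

    coding_share   -- fraction of total delivery time spent writing code (0..1)
    coding_speedup -- how many times faster that fraction becomes (> 0)
    """
    if not 0 <= coding_share <= 1:
        raise ValueError("coding_share must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    return 1 / ((1 - coding_share) + coding_share / coding_speedup)


# Hypothetical assumption: 30% of delivery time is coding, the rest is
# reviews, PR queues, test failures, context switching, and deployments.
CODING_SHARE = 0.30

for factor in (2, 10, 1000):
    result = overall_speedup(CODING_SHARE, factor)
    print(f"coding {factor}x faster -> delivery {result:.2f}x faster")

# Upper bound even if coding took zero time.
ceiling = 1 / (1 - CODING_SHARE)
print(f"ceiling: {ceiling:.2f}x")
```

Under that assumption, even a 10x faster coding step leaves end-to-end delivery well under 1.5x faster, because the untouched 70% dominates.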

Let’s look at some interesting research. In 2023, GitHub and Microsoft ran a controlled experiment where developers were asked to implement a small HTTP server in JavaScript. Developers using Copilot finished the task 55.8% faster than the control group. The setup was closer to a benchmark exercise than day-to-day work, and most of the gains came from less experienced devs who leaned on the AI for scaffolding. And, obviously, those were vendor-run experiments.

METR tested the opposite scenario. Senior engineers worked in large OSS repositories that they already knew well. In that environment, the minutes saved on boilerplate were wiped out by time spent reviewing, fixing, or discarding AI output. As one of my teammates put it, you’re not actually saving time with AI coding; you’re just trading less typing for more time reading and untangling code.

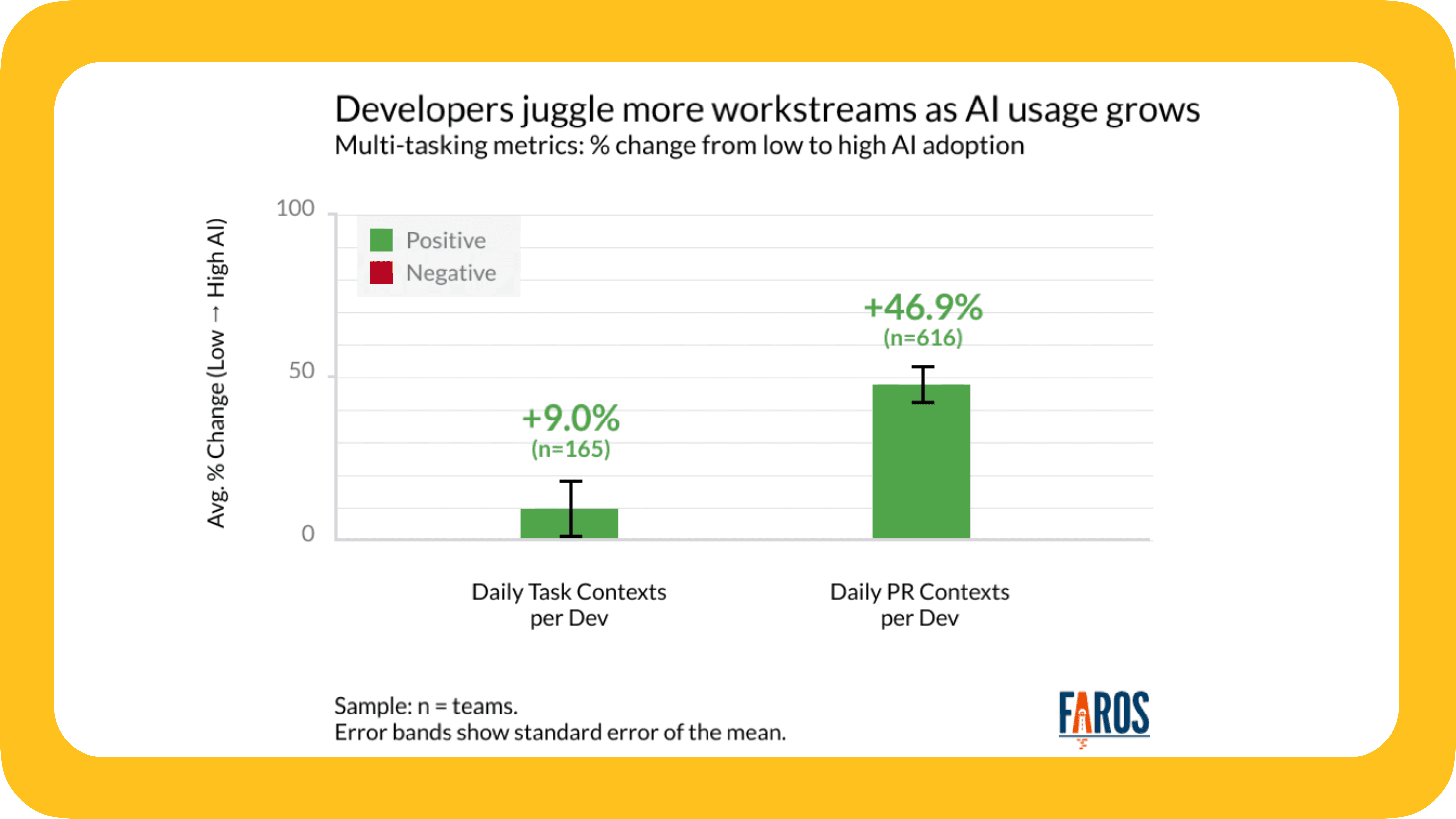

Even when AI enables parallelism, another study shows that the cost is more juggling and more reviews, not less time to ship. In July 2025, Faros AI analyzed telemetry from over 10,000 developers across 1,255 teams. They found that teams with high AI adoption interacted with 9% more tasks and 47% more pull requests per day. Developers were juggling more parallel workstreams because AI could scaffold multiple tasks at once.

Historically, context switching is a negative indicator, correlated with cognitive overload and reduced focus. Faros points out that developers spend more time orchestrating and validating AI contributions across streams. That extra juggling cancels out much of the speed-up you get in typing.

Not all findings are negative. In 2024, researchers from MIT, Harvard, and Microsoft ran large-scale field experiments across three companies: Microsoft, Accenture, and a Fortune 100 firm. The sample covered 4,867 professional developers working on production code.

With access to AI coding tools, developers completed 26.08% more tasks on average compared to the control group. Junior and newer hires adopted the tools more readily and showed the largest productivity boost:

- Senior developers, especially those already familiar with the codebase and stack, saw little or no measurable speed-up.

- The boost was strongest in situations where devs lacked prior context and used the AI to scaffold, fill in boilerplate, or cut down on docs lookups.

These gains 👆 were good but not near 10x.

Security is where the gap shows most clearly

With the core focus of our company on permission management for humans and machines, we naturally look at AI coding assistance through a security lens.

Stanford research from 2023 found that developers using assistants shipped more vulnerabilities because they trusted the output too much. Presumably, fewer developers trust AI-generated code in 2025.

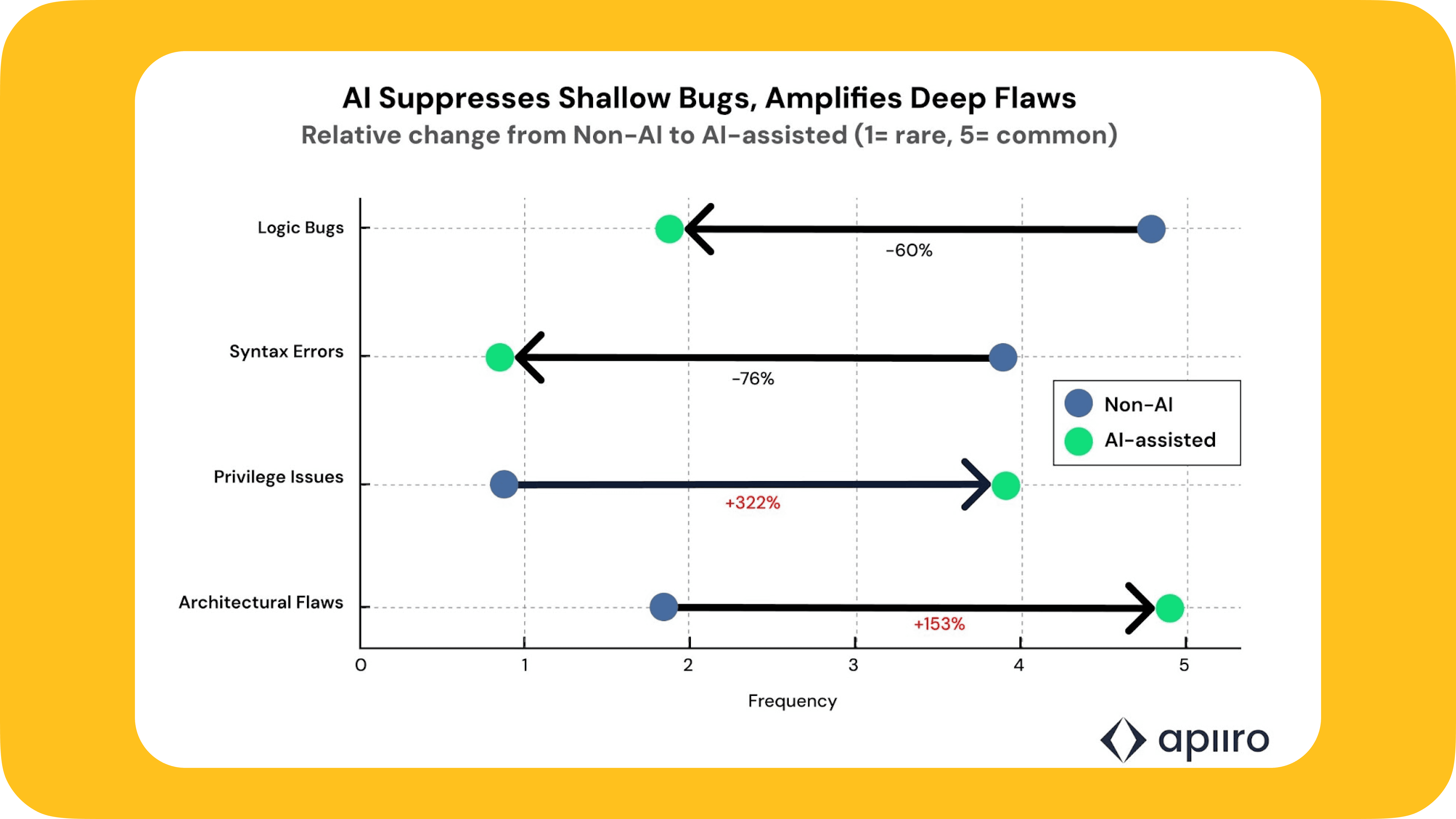

However, Apiiro’s 2024 research is alarming. It showed that AI-generated code introduced 322% more privilege escalation paths and 153% more design flaws than human-written code.

Apiiro’s 2024 research also found:

- AI-assisted commits were merged into production 4x faster than regular commits, which meant insecure code bypassed normal review cycles.

- Projects using assistants showed a 40% increase in secrets exposure, mostly hard-coded credentials and API keys generated in scaffolding code. Accidentally pasting API keys, tokens, or configs into an AI assistant is one of the top risks. Even if rotated later, those secrets are now in someone else’s logs.

- AI-generated changes were linked to a 2.5x higher rate of critical vulnerabilities (CVSS 7.0+) flagged later in scans.

- Review complexity went up significantly: PRs with AI code required 60% more reviewer comments on security issues.
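The secrets-exposure finding is the easiest one to guard against mechanically. Below is a minimal sketch of a pre-commit or pre-paste check that flags likely hard-coded credentials. The three patterns and the `find_secrets` helper are illustrative assumptions, far from exhaustive, and not a substitute for a dedicated secret scanner.

```python
import re

# Illustrative patterns only (assumption): real scanners cover many more formats.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(api[_-]?key|secret|token|password)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]",
        re.IGNORECASE,
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings


# Sample input; the key is the placeholder value used in AWS documentation.
sample = "\n".join(
    [
        'aws_key = "AKIAIOSFODNN7EXAMPLE"',
        'db_password = "correct-horse-battery"',
        'region = "eu-west-1"',
        'password = os.environ["DB_PASSWORD"]',
    ]
)

for line_number, name in find_secrets(sample):
    print(f"line {line_number}: possible {name}")
```

The last sample line reads the password from the environment, so it is not flagged. That is the pattern to prefer in scaffolding code, whoever or whatever wrote it.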

And it is not just security; it is compliance too. If code, credentials, or production data leave your environment through an AI assistant, you cannot guarantee deletion or control over where that data ends up. For organizations under SOC2, ISO, GDPR, or HIPAA, that can mean stepping outside policy or outright violations. This is exactly the kind of blind spot CISOs worry about.

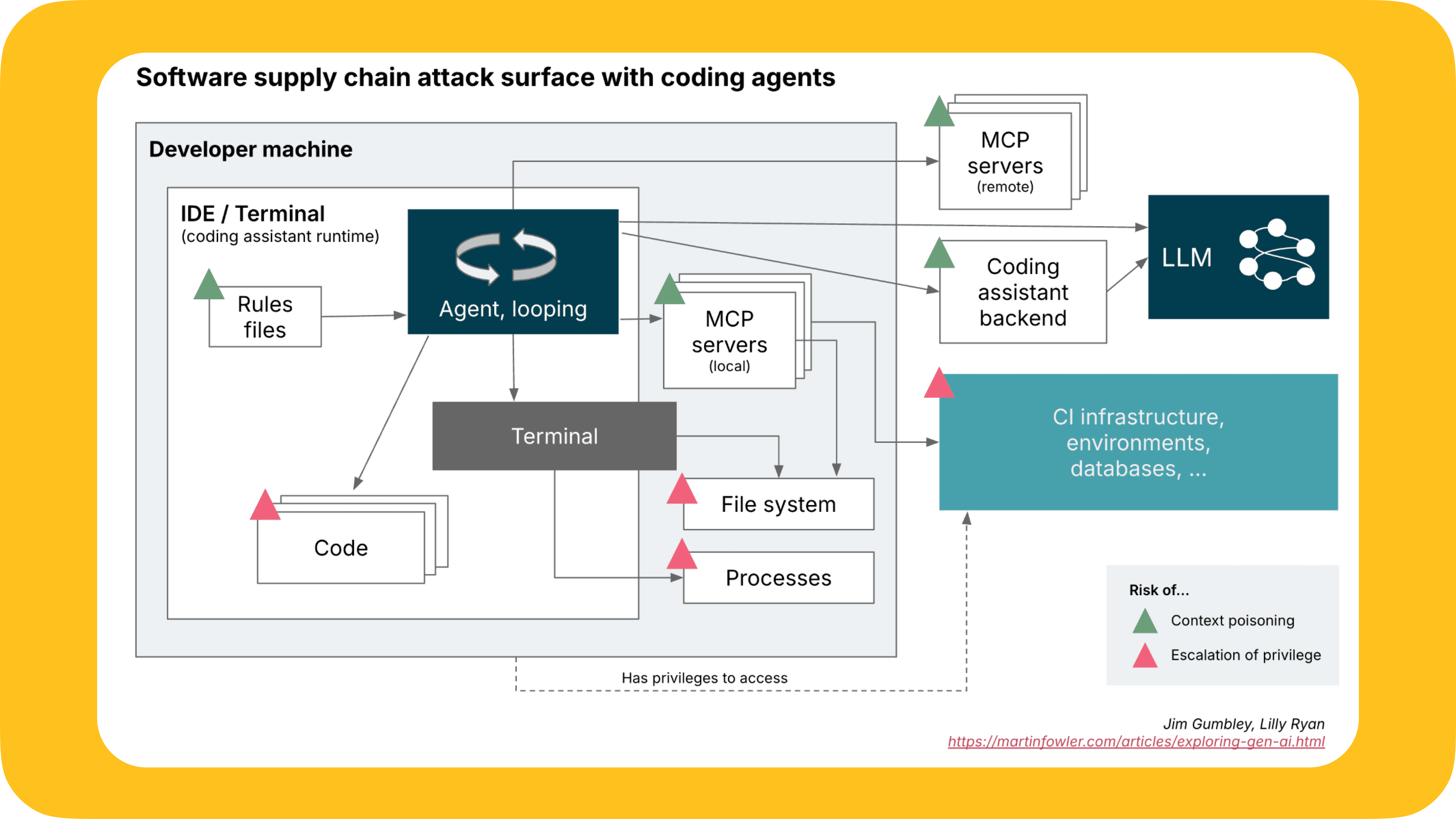

How AI assistants create new attack surfaces

AI coding assistants don’t just generate code. They also bring new runtimes, plugins, and extensions into the developer workflow. That extra surface means more places where things can go wrong, and attackers have already started exploiting them:

- In July 2025, Google’s Gemini CLI shipped with a bug that let attackers trigger arbitrary code execution on a dev machine. The tool that was supposed to speed up coding workflows basically turned into a local RCE vector.

- That same month, a release of the Amazon Q extension for VS Code carried a poisoned update. Hidden prompts in the release told the assistant to delete local files and even shut down AWS EC2 instances. Because the extension shipped with broad local and cloud permissions, the malicious instructions executed without barriers.

These incidents highlight specific failures, but the bigger issue is structural. Coding assistants expand the software supply chain and increase the number of privileged connections that can be abused.
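One structural mitigation is to treat anything an assistant wants to run as untrusted input and deny by default. The following is a minimal sketch of that idea, assuming a hypothetical wrapper that sees each proposed shell command before execution. The `ALLOWED` policy and the `is_allowed` helper are invented for illustration and are not a sandbox.

```python
import shlex

# Hypothetical policy: program name -> permitted subcommands.
# Anything not listed here is rejected.
ALLOWED = {
    "git": {"status", "diff", "log"},
}

# Characters that let one command smuggle in another via the shell.
SHELL_METACHARACTERS = set(";|&`$<>\n")


def is_allowed(command: str) -> bool:
    """Deny-by-default check for a command proposed by an assistant."""
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return False
    try:
        argv = shlex.split(command)
    except ValueError:
        # Unbalanced quotes and similar parse errors are rejected outright.
        return False
    if len(argv) < 2:
        return False
    program, subcommand = argv[0], argv[1]
    return subcommand in ALLOWED.get(program, set())


for candidate in (
    "git status",
    "git push origin main",
    "git status; rm -rf ~",
    "aws ec2 terminate-instances",
):
    verdict = "allow" if is_allowed(candidate) else "deny"
    print(f"{verdict}: {candidate}")
```

A check like this only narrows what a compromised assistant can ask for. The permissions of the account it runs under still need to follow least privilege.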

The diagram below, from Jim Gumbley and Lilly Ryan’s piece on Martin Fowler’s site, maps this new attack surface. It shows how agents, MCP servers, file systems, CI/CD, and LLM backends are all interconnected, with each link a potential entry point for context poisoning or privilege escalation.

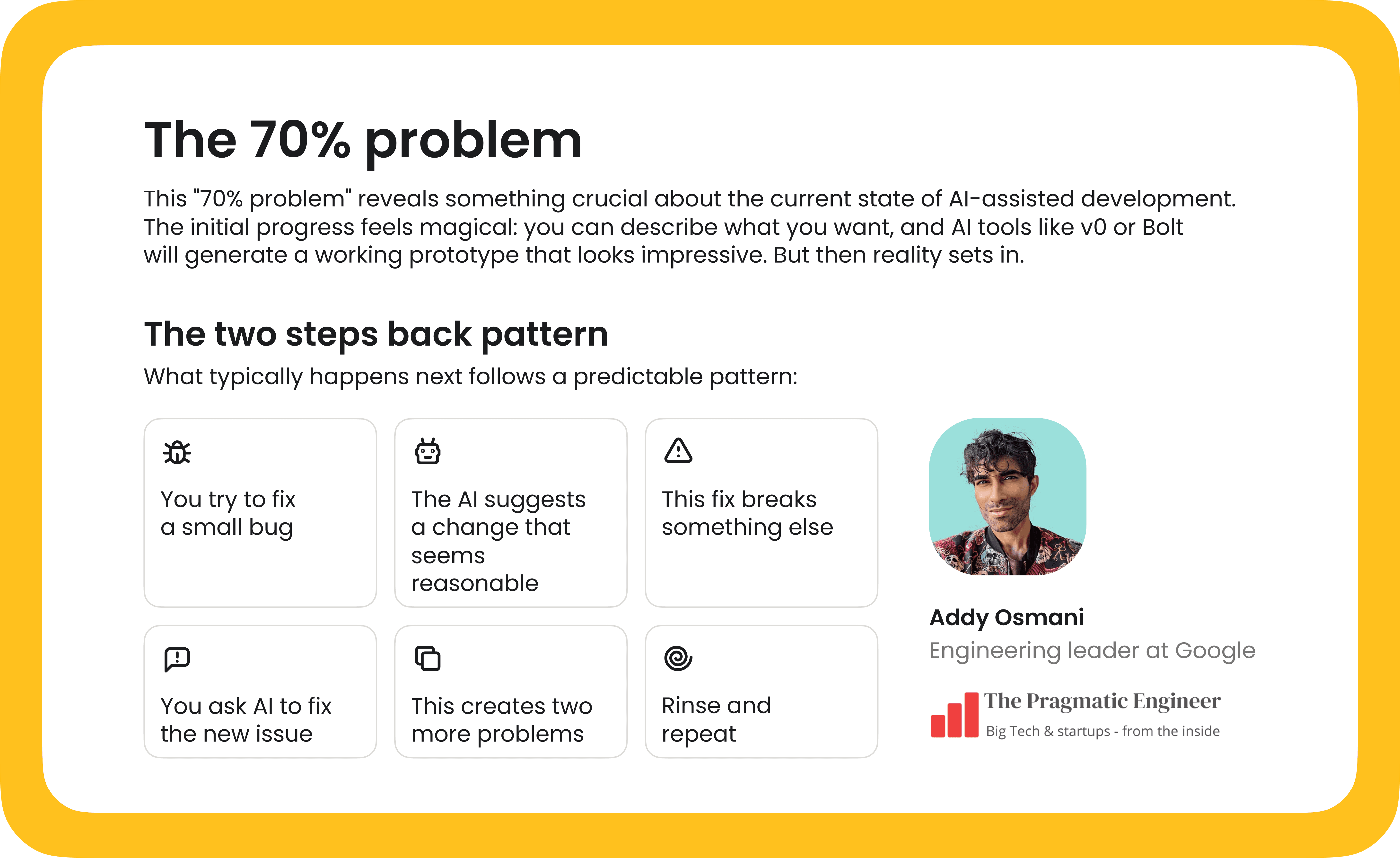

The 70% problem

I loved Addy Osmani’s piece in Pragmatic Engineer because it nailed what many developers see. AI can get you 70% of the way, but the last 30% is the hard part. The assistant scaffolds a feature, but production readiness means edge cases, architecture fixes, tests, and cleanup.

For juniors, that 70% feels magical. For seniors, the last 30% is often slower than writing it clean from the start. That is why METR’s experienced developers were slower with AI; they already knew the solution, and the assistant just added friction.

This is the difference between a demo and production. AI closes the demo gap quickly, but shipping to production still belongs to humans. A demo only has to run once. Production code has to run a million times without breaking. Humans at least know what they want, even if they misunderstand requirements. An LLM has no intent, which is why the final 30% always falls apart.

Our team also flagged another issue. Patterns you learn while vibe coding with these tools often break with every model update. There is no stable base to build on. Engineering needs determinism, not shifting patterns that collapse the moment the model is retrained.

Business opinion vs. developer opinion

The story of the “10x engineer” has always been more appealing in boardrooms than in code reviews. Under pressure to do more with less, it is tempting for leadership to see AI as a multiplier that could let one team ship the work of ten. The current AI hype plays straight into that narrative.

Developers, though, know where the real bottlenecks are. No AI collapses design discussions, sprint planning, meetings, or QA cycles. It does not erase tech debt or magically handle system dependencies. The reality is incremental speed-ups in boilerplate, not 10x multipliers across the delivery pipeline.

Engineers and EMs both need the same thing — software that is secure, reliable, and production-ready. AI can play a role in getting there, but only when expectations are grounded in how development actually works.

Shameless plug: If you are working on IAM and permission management, our product Cerbos Hub handles fine-grained authorization for humans and machines. Enforce contextual and continuous access control across apps, APIs, services, workloads, MCP servers, and AI agents — all from one place.

Book a free Policy Workshop to discuss your requirements and get your first policy written by the Cerbos team

