MCP security & AI agent authorization. A CISO and architect’s guide to securing the new AI perimeter.

An AI-powered customer service bot, acting on behalf of a logged-in user, is asked a seemingly simple question: “Can you summarize my recent support tickets and check the status of my latest order?” The bot complies. It has verified the user’s identity and is armed with a service account that grants it broad access across backend systems. It queries the support ticket database and the order database, aggregates the results, and returns an answer.

But something is wrong. Due to a subtle flaw in how it handles session data, or perhaps through a cleverly crafted prompt, the bot pulls in information from another user’s account. Suddenly, our user is viewing data they should never have seen: the name, address, and order details of a complete stranger.

There was no breach of authentication. No credentials were stolen. The system simply did what it was asked to do by a user who was legitimately inside the perimeter.

This is the kind of risk that keeps security leaders and system architects awake at night. In our rush to harness the power of AI, we have collectively introduced a new class of vulnerability. We have created a novel and poorly understood architectural component, the Model Context Protocol (MCP) server, and granted it the keys to our kingdom. We have authenticated the AI agent, but we have failed to properly authorize its actions. The result is a security blind spot big enough to drive a truck through.

This article provides a strategic overview for CISOs and a technical blueprint for architects on how to address this critical vulnerability. It is a guide to moving beyond outdated security models and implementing a modern, fine-grained authorization architecture that enables you to innovate with AI safely and confidently.

MCP security risks. The architecture of a modern data leak

To a CISO, the MCP server represents the single largest expansion of the attack surface in the last decade. To an architect, it is a new, high privilege component that has become a critical point of failure.

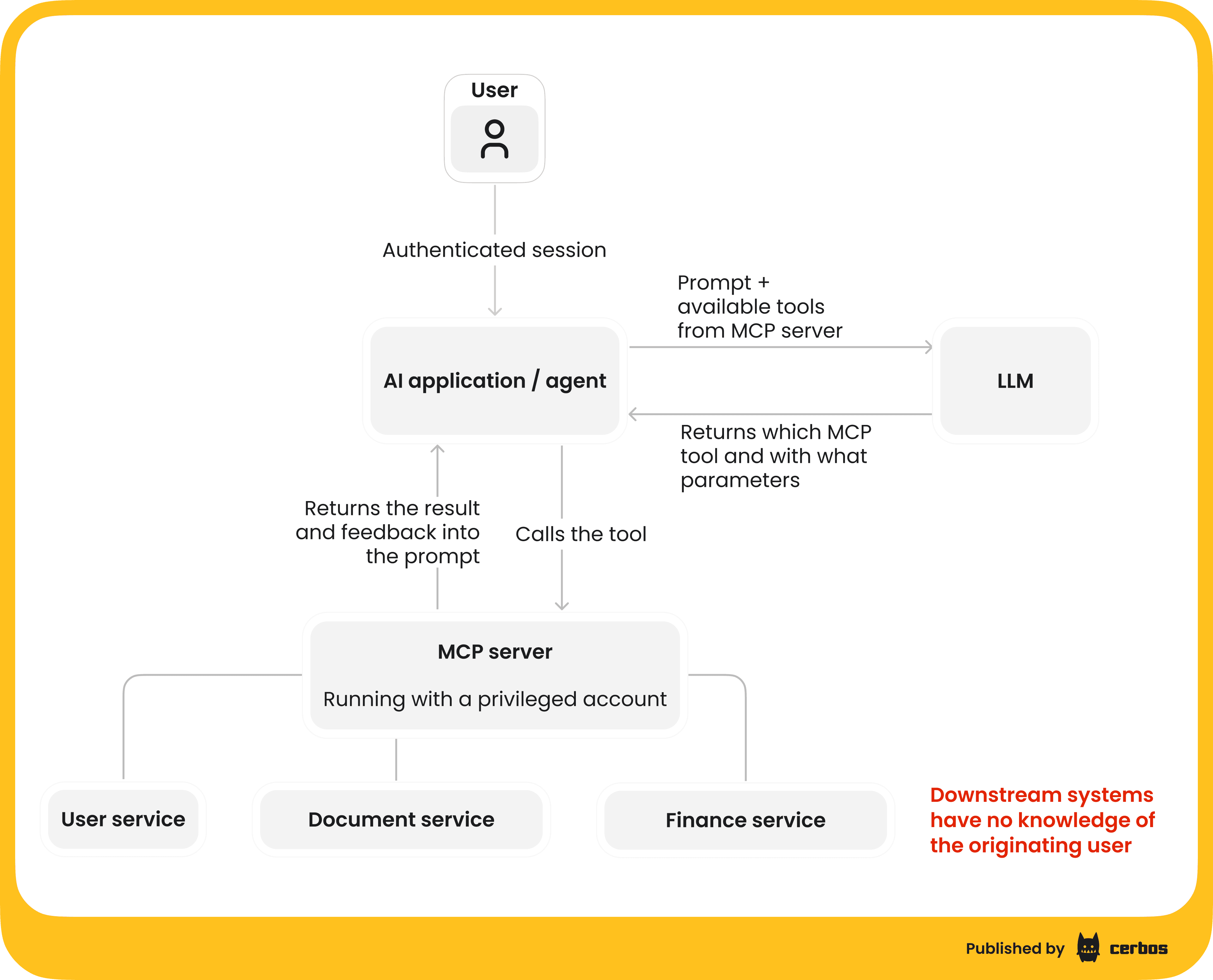

The MCP server is a layer that exposes tools and data, often internal corporate data, to an LLM, so that AI applications can call on them whenever the model decides they are needed. It doesn’t just pass along requests like a traditional API gateway; it acts blindly as directed by an LLM that has decided to fetch some data or run some action. When these MCP servers use their own powerful credentials to roam across your backend systems, they fetch and aggregate data from dozens of sources to fulfill that request, without necessarily respecting the originating user's permissions.

It is also now the most privileged actor in your architecture. Understanding the scope of this power, and the dangers it introduces, is the first step toward securing it.

This architecture creates two immediate and severe problems.

The broken chain of identity and the "confused deputy"

First, in many AI designs, the MCP server operates using its own credentials, often a single, all-powerful service account. When the AI agent, via the MCP, calls an internal API or database, the identity of the original user is lost. The downstream services see a request coming from a highly privileged internal service, not from User Alice or Bob. This breaks the chain of identity that traditional end-to-end security models rely on.

Any fine-grained access controls you had at the service level are effectively bypassed. Your security posture now depends entirely on the MCP server itself making the correct authorization decision for every request, a risky proposition, to say the least. If that server is tricked or fails to enforce policy on one request, there’s nothing downstream to stop a data leak. In essence, the MCP server becomes a single point of security failure in your data access layer.
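To make the lost identity concrete, here is a minimal sketch. The `BackendRequest` type, the `mcp-service-account` name, and the backend rule are all hypothetical, chosen only to illustrate the failure mode described above, not any particular product's behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BackendRequest:
    caller: str                  # identity the backend actually sees
    on_behalf_of: Optional[str]  # original end user, if it was propagated
    record_owner: str            # owner of the record being requested


def backend_allows(req: BackendRequest) -> bool:
    """Hypothetical backend rule: trusted services may read anything,
    end users may read only their own records."""
    if req.on_behalf_of is None:
        # No user identity arrived, so only the caller's own privileges count.
        return req.caller == "mcp-service-account"
    return req.on_behalf_of == req.record_owner


# Alice's session, but the MCP server calls with its service account only.
identity_lost = BackendRequest("mcp-service-account", None, "bob")
# The same call, with Alice's identity carried along the chain.
identity_kept = BackendRequest("mcp-service-account", "alice", "bob")

print(backend_allows(identity_lost))  # True: Bob's record leaks into Alice's session
print(backend_allows(identity_kept))  # False: the backend can refuse
```

The backend's own rule is identical in both calls. The only difference is whether it ever learns who the request is really for.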

Second, consider a more modern pattern where the MCP server tries to act on behalf of the user by carrying the user’s token or permissions. On paper, this preserves the user’s identity through the chain. The MCP “pretends” to be the user when fetching data. But even here, we encounter a critical problem: the MCP server is now an over-privileged proxy. When a user authenticates, the MCP often inherits a token or session with that user’s entire permission set. The AI agent now wields all of the user’s access rights, potentially across many systems, and it may wield them in unintended ways.

This leads directly to the classic “confused deputy” problem, amplified by the unpredictability of AI. The MCP server is the deputy, a trusted entity that can be confused (misled by a malicious or accidental prompt) into misusing its authority.

For example, if a user connects an AI assistant to their suite of cloud apps (email, calendar, drive, etc.), they might inadvertently grant it broad permissions across all those services. The user asks the AI to organize their email, but behind the scenes, a malicious or manipulated MCP server might also quietly pull data from their calendar or shared drive. The principle of least privilege is immediately violated. The MCP isn’t confused about who the user is; it knows exactly which user account it’s acting for. But it can easily be tricked into exceeding what that user should be allowed to do in context. A cleverly crafted prompt can entice this highly privileged deputy to retrieve and reveal data well outside the user’s true scope.

In our earlier support bot scenario, even if the MCP server was correctly impersonating User A, a simple bug or prompt misuse led it to grab User B’s records, something User A had no authorization to see. A secure authentication flow quickly turned into a conduit for a major data leak.
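One way to picture least privilege "in context" is to narrow the user's grants to what the current task needs before any tool call runs. The sketch below assumes a hypothetical task-to-scope mapping; the scope strings and task names are illustrative only.

```python
# Everything the user granted when connecting the assistant (illustrative scopes).
USER_GRANTS = frozenset({"email:read", "email:write", "calendar:read", "drive:read"})

# Hypothetical mapping from a requested task to the scopes it actually needs.
TASK_SCOPES = {
    "organize_email": frozenset({"email:read", "email:write"}),
    "summarize_calendar": frozenset({"calendar:read"}),
}


def effective_scopes(user_grants: frozenset, task: str) -> frozenset:
    """Intersect the user's grants with what the task needs; unknown tasks get nothing."""
    return user_grants & TASK_SCOPES.get(task, frozenset())


def tool_call_allowed(user_grants: frozenset, task: str, required_scope: str) -> bool:
    """A tool call passes only if its scope survives the narrowing."""
    return required_scope in effective_scopes(user_grants, task)


print(tool_call_allowed(USER_GRANTS, "organize_email", "email:write"))  # True
print(tool_call_allowed(USER_GRANTS, "organize_email", "drive:read"))   # False
```

The user really does hold `drive:read`, yet the call is refused because organizing email does not need it. That gap between what the user may do and what the task may do is exactly where a confused deputy operates.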

- For the CISO. This is a governance and compliance nightmare. You have a component that bypasses your established data access controls, creating an unauditable "super user" that can be manipulated by any authenticated user. This model makes it impossible to enforce the principle of least privilege and opens the door to significant data privacy violations.

- For the Architect. This is a critical design flaw. The user-to-system-to-system identity flow breaks your end-to-end security model. You are placing absolute trust in a single, complex component to correctly self-regulate its access to every piece of data in your organization, a strategy that is architecturally unsound and destined to fail.

Why your current security model is broken

When faced with this challenge, the default response is to reach for familiar security tools. However, the standard approaches to authorization are not just inadequate for AI; they actively contribute to a weaker security posture.

Two common patterns, Role-Based Access Control and hard-coded authorization, are proving inadequate for AI-driven systems.

The anti-pattern of role-based access control (RBAC)

RBAC is the first tool most teams grab, but it fails spectacularly in this new context. Its static nature is a fundamental mismatch for the dynamic needs of AI. Roles are coarse-grained and context-blind: they encode that “User X is an HR manager,” but not which employees’ records that manager should access, or under what conditions. AI agents don’t operate on static, pre-defined pathways; they generate queries and use cases on the fly, combining data in ways we didn’t explicitly anticipate.

This leads to "role explosion," where an unmanageable number of highly specific roles are created to handle every type of permission, creating a system that is impossible to reason about. Before long, nobody on the team can even remember what each role really means.

Worse, RBAC cannot handle relationships or context. A “Manager” role cannot answer, “Is this manager allowed to view this particular employee’s performance review?” The role might grant general access to reviews, but it doesn’t encode relationships like who reports to whom. It is a blunt, global permission in a world that requires sharp, contextual precision.
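The difference is easy to see side by side. The following sketch uses hypothetical `Principal` and `Review` types; the second check adds the reporting relationship that a bare role cannot express.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    id: str
    roles: frozenset


@dataclass(frozen=True)
class Review:
    employee_id: str
    manager_id: str  # who the reviewed employee reports to


def rbac_can_view(principal: Principal, review: Review) -> bool:
    """Role-only check: any manager can view any review."""
    return "manager" in principal.roles


def relationship_can_view(principal: Principal, review: Review) -> bool:
    """Role plus relationship: only the employee's own manager can view."""
    return "manager" in principal.roles and review.manager_id == principal.id


carol = Principal(id="carol", roles=frozenset({"manager"}))
review_of_dave = Review(employee_id="dave", manager_id="erin")  # Dave reports to Erin

print(rbac_can_view(carol, review_of_dave))          # True: over-permissive
print(relationship_can_view(carol, review_of_dave))  # False: Carol is not Dave's manager
```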

In AI-driven workflows, the shortcomings of RBAC are magnified. Imagine trying to create roles for every combination of data and tool an AI agent might need (“support_agent_with_order_access” or “sales_bot_with_customer_data_read”). The number of roles needed becomes unmanageable, and you would still miss the real deciding factors, like data ownership or time-based access. RBAC simply lacks the expressiveness to keep up with AI’s flexibility.

Inevitably, teams either over-provision roles, granting far more access than necessary, or constantly struggle to tweak and add roles for every new situation. Often both.

- For the CISO. An RBAC model for your AI applications will inevitably lead to overly permissive roles. To make the system work, you will be forced to grant broad access, which will result in security gaps and an inability to provide auditors with evidence of fine-grained control.

- For the Architect. RBAC is a source of immense technical debt. It forces you into a corner where you must choose between creating hundreds of brittle roles or writing complex, hard-to-maintain logic to work around the model's limitations. Either way, you’re contorting your architecture around a simplistic access model. It’s a recipe for complexity and bugs.

The trap of hard-coded authorization logic

When RBAC inevitably fails, developers often resort to writing authorization logic directly into the application code (in this case, the MCP server implementation), using if/else statements to handle the nuance that roles can’t capture. This approach may seem to work at first, but it is an architectural trap that creates a distributed, inconsistent, and unauditable security model.

The rules that govern who can access what become scattered across dozens of microservices. There is no single source of truth for your security policy (“who can do what”). As a result, every permission change requires a full development and deployment cycle, turning your security posture into a bottleneck for business agility.

Something as simple as “Users in Europe now require an extra clearance to access Feature X” means hunting through code to find every place where Feature X is gated, updating conditions, and redeploying services.
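A small sketch shows how such a change goes wrong. The two functions below stand in for gates on the same feature in two different services; their names and the `User` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    region: str
    has_extra_clearance: bool


def web_api_can_use_feature_x(user: User) -> bool:
    # Updated for the new rule: European users need the extra clearance.
    if user.region == "EU":
        return user.has_extra_clearance
    return True


def export_job_can_use_feature_x(user: User) -> bool:
    # Same feature, gated in a different service; this copy was never updated.
    return True


eu_user = User(region="EU", has_extra_clearance=False)

print(web_api_can_use_feature_x(eu_user))     # False
print(export_job_can_use_feature_x(eu_user))  # True: the old rule still applies here
```

Both functions look reasonable in isolation. The inconsistency only shows up when you compare them, which is precisely what scattered checks make hard to do.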

Meanwhile, the lack of centralized oversight means you can’t easily verify that all those scattered checks actually enforce a coherent policy. If asked, “Show me that only managers can approve expense reports, and only for their own team,” you’d have to search through logs and code in multiple services. Hardly a sustainable compliance practice.

- For the CISO. Hard-coded logic means your security policy is invisible. It is hidden in code that only developers can read, making it impossible to audit effectively. There is no guarantee of consistent enforcement, and demonstrating compliance becomes a painful, manual process of code review.

- For the Architect. This is a maintainability nightmare. It tightly couples your security logic to your business logic, making both more difficult to change. You create a brittle, distributed system that is impossible to reason about and guaranteed to contain inconsistencies and security holes.

If you’re looking to safely expose tools to agents without compromising control, reliability, or auditability, read our technical guide, try out Cerbos Hub, or read our ebook "Zero Trust for AI: Securing MCP Servers".

A modern blueprint for AI authorization

The solution is not to write better code inside your application. The solution is to move the authorization logic out of your application and MCP servers entirely. By adopting an externalized authorization architecture, you regain control and consistency. You get a system that is secure, scalable, and agile.

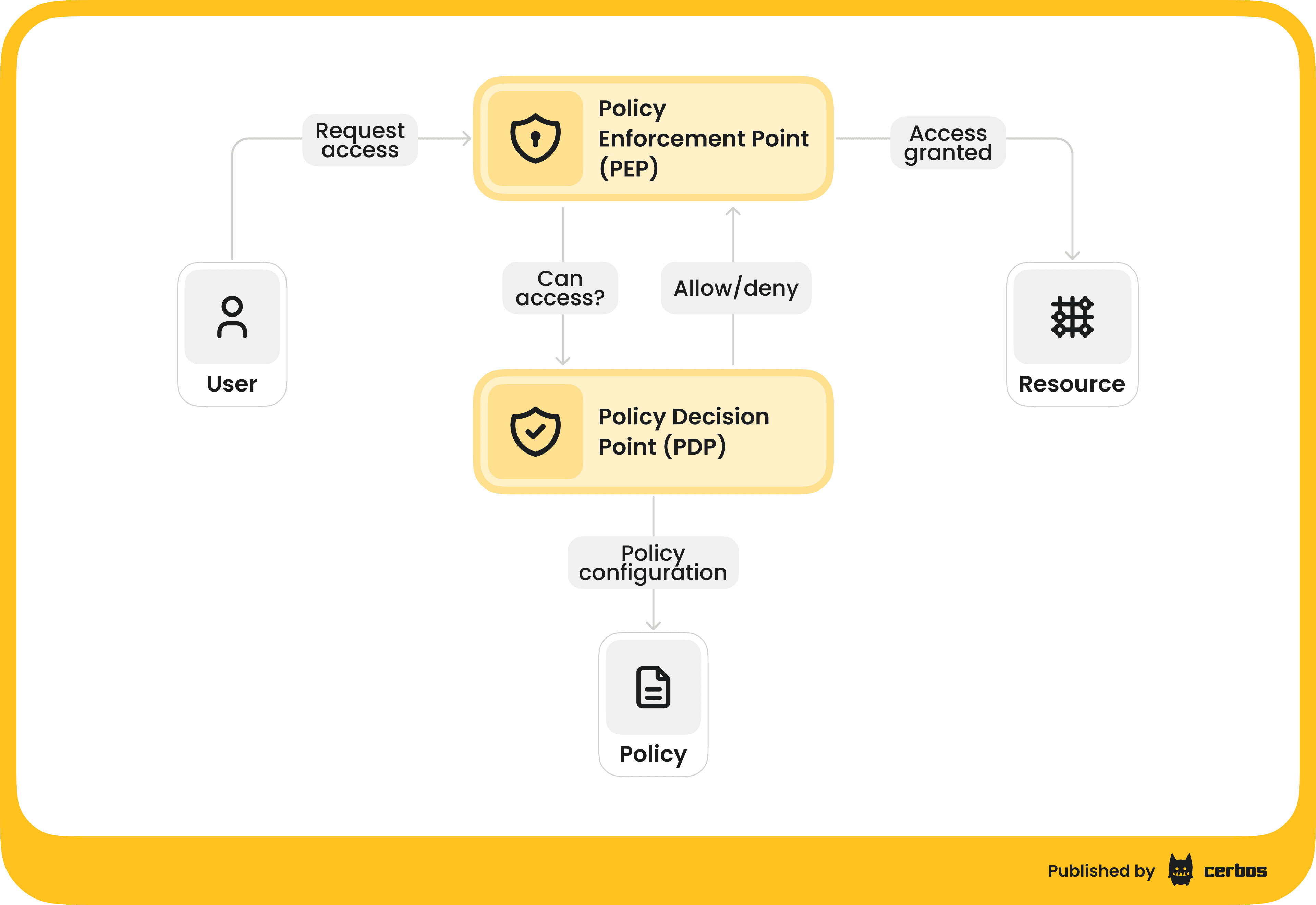

This authorization pattern is composed of two parts: the Policy Enforcement Point and the Policy Decision Point.

Think of a high-security office building: the turnstile at the entrance is the enforcement point (PEP). Its job isn’t to decide who gets in; it’s simply to stop everyone and ask for credentials. The actual decision is made by a central brain, the access control server, which is the PDP. The turnstile (PEP) sends the badge information to the server, the server checks the rules and responds “unlock” or “deny,” and the turnstile acts accordingly. The turnstile itself stays simple and does the same thing for every person, every time.

We want to replicate this pattern in our AI systems. In practice, that means introducing a lightweight, consistent authorization check at every critical trust boundary, from the AI agent or application, through the MCP server, and down to each backend service it calls. Each of these components will act as a PEP: when a request comes in, the component doesn’t autonomously decide what to allow; instead, it formulates an authorization query and sends it to a centralized PDP service. The query might be as simple as: “User 123 is attempting Action X on Resource Y, is this allowed right now?” The PDP, which is a dedicated authorization engine, evaluates this against a unified set of policies and responds with “Allow” or “Deny.”
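The PEP and PDP split described above can be sketched in a few lines. This is a toy, in-memory stand-in under stated assumptions: `AuthzQuery`, `PolicyDecisionPoint`, and `enforce` are hypothetical names, and a real PDP would run as a separate service rather than as a dictionary of lambdas.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class AuthzQuery:
    principal_id: str
    action: str
    resource_kind: str
    resource_attrs: Dict[str, str] = field(default_factory=dict)


Rule = Callable[[AuthzQuery], bool]


class PolicyDecisionPoint:
    """Toy in-memory PDP: one rule per (resource kind, action) pair."""

    def __init__(self, rules: Dict[Tuple[str, str], Rule]) -> None:
        self._rules = rules

    def is_allowed(self, query: AuthzQuery) -> bool:
        rule = self._rules.get((query.resource_kind, query.action))
        return rule is not None and rule(query)  # no matching rule means deny


def enforce(pdp: PolicyDecisionPoint, query: AuthzQuery, handler: Callable[[], str]) -> str:
    """PEP: ask the PDP, then either run the handler or refuse."""
    if not pdp.is_allowed(query):
        raise PermissionError(
            f"{query.principal_id} may not {query.action} {query.resource_kind}"
        )
    return handler()


pdp = PolicyDecisionPoint({
    # Users may read only the orders they own.
    ("order", "read"): lambda q: q.resource_attrs.get("owner") == q.principal_id,
})

own_order = AuthzQuery("user-123", "read", "order", {"owner": "user-123"})
print(enforce(pdp, own_order, lambda: "order details"))  # order details
```

Note that `enforce` contains no policy of its own. Like the turnstile, it does the same thing for every request and leaves the decision to the PDP.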

Crucially, the verification doesn't happen only once at the front door. It happens every step of the way.

Suppose our AI agent (the front-end interface) receives a prompt from a user. It checks with the PDP whether that user can make that kind of request. Next, the agent invokes the MCP server to perform some tool actions; the MCP server, before executing, also checks with the PDP to ensure the user is allowed to use that tool on the requested data. Then, when the MCP needs to fetch data from, say, the finance microservice, that microservice in turn does its own check with the PDP to verify the request. Each service in the chain independently enforces the same centralized policies.
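The same flow can be sketched as three functions that each consult the PDP before doing any work. The `POLICY` table, the hop names, and `pdp_check` are hypothetical stand-ins for a call to a central decision service.

```python
from typing import List, Tuple

# Hypothetical unified policy: (action, resource) pairs each user may perform.
POLICY = {
    "alice": {("ask", "assistant"), ("use_tool", "finance_report"), ("read", "finance_data")},
    "bob": {("ask", "assistant"), ("use_tool", "finance_report")},
}

decision_log: List[Tuple[str, str, str, str, bool]] = []


def pdp_check(hop: str, user: str, action: str, resource: str) -> None:
    """Stand-in for a PDP call: records the decision and raises on deny."""
    allowed = (action, resource) in POLICY.get(user, set())
    decision_log.append((hop, user, action, resource, allowed))
    if not allowed:
        raise PermissionError(f"{hop}: {user} may not {action} {resource}")


def finance_service(user: str) -> str:
    pdp_check("finance-service", user, "read", "finance_data")
    return "Q3 totals"


def mcp_server(user: str) -> str:
    pdp_check("mcp-server", user, "use_tool", "finance_report")
    return finance_service(user)


def agent(user: str) -> str:
    pdp_check("agent", user, "ask", "assistant")
    return mcp_server(user)


print(agent("alice"))  # Q3 totals
```

In this sketch, Bob passes the first two checks but is stopped at the finance service, so `agent("bob")` raises `PermissionError`. The MCP server's broad reach does not help him, because the last hop makes its own decision.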

This creates a chain of verification. Even though the MCP server has broad access capabilities, it no longer operates on blind trust. At each step it is effectively asking, “Should I let this action proceed for this user?” and getting an authoritative answer. No single component, not even the MCP, is implicitly trusted with unchecked access to data. The “deputy” (MCP) is no longer left to its own devices; it’s continually supervised by the policy brain. The end result is that fine-grained authorization rules are consistently applied throughout the entire flow, dramatically reducing the chance of a confused deputy causing havoc.

- For the CISO. This model gives you a centralized point of control and visibility for all authorization. You now have a single source of truth for your access policies, making governance and auditing straightforward. Every access decision can be logged in a structured format, providing a complete, consistent audit trail.

- For the Architect. This is a clean, decoupled architecture. You remove complex, stateful logic from your application code, making your services simpler and more maintainable. You create a clear separation of concerns, allowing your security model to evolve independently of your application's deployment lifecycle. This pattern is language-agnostic and fits into any microservices environment. It also future-proofs your system: you can adopt new AI tools or services without rewriting authorization logic each time, as long as each new component can call the central PDP.
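As an illustration of what a structured decision log might look like, here is a minimal sketch that serializes each decision as one JSON line. The field names and the `audit_record` helper are assumptions made for this example, not the log format of any specific product.

```python
import json
from datetime import datetime, timezone


def audit_record(principal: str, action: str, resource: str, allowed: bool, policy: str) -> str:
    """Serialize one authorization decision as a single JSON line."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "action": action,
            "resource": resource,
            "decision": "ALLOW" if allowed else "DENY",
            "policy": policy,  # which rule produced the decision (hypothetical name)
        },
        sort_keys=True,
    )


line = audit_record("user-123", "read", "order:987", False, "order.read.owner_only")
print(line)
```

Because every hop asks the same PDP, every decision lands in the same format, which is what makes questions like “who was denied access to orders last week?” answerable with a query instead of a code review.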

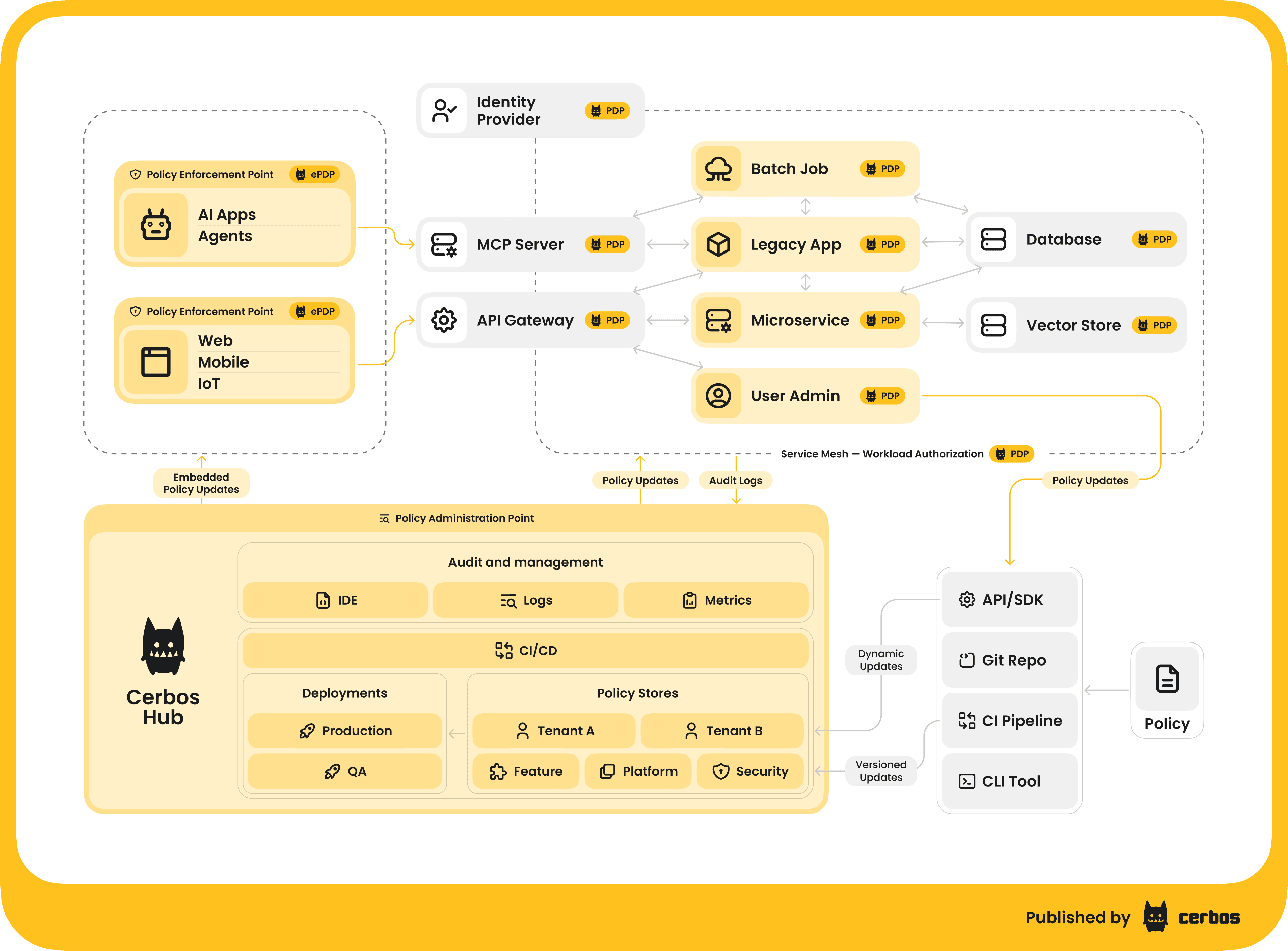

Implementing the blueprint with Cerbos

How do we put this blueprint into practice? One way is by using Cerbos, a high-performance externalized authorization solution purpose-built to serve as the Policy Decision Point in modern architectures. It provides the concrete implementation that turns this architectural vision into a production-ready reality.

It allows you to define your access policies in a concise, human-readable way and then evaluates those policies on every request, in real time, without adding noticeable latency.

Performance and scalability

The primary concern for any architect is latency. Cerbos is designed for speed. Written in Go, it makes decisions in under a millisecond. When deployed as a sidecar alongside your MCP server, the total latency for an authorization check is negligible, making real-time, per-resource checks feasible even for the most demanding AI workloads. In other words, your AI agent won’t even notice it. Its stateless design means it scales horizontally by default, so capacity can grow with the load you put on it.

Operational excellence

For CISOs and architects, a tool is only as good as its operational maturity. Cerbos is a lightweight, single binary that can be deployed anywhere, from a developer's laptop to a massive Kubernetes cluster. It comes with a full suite of tools for local development, unit testing, and CI/CD integration.

For organizations that prefer a managed approach, Cerbos Hub can be used. It is a managed control plane that automates the policy deployment workflow. This gives you a centralized, auditable way to manage policy versioning, reviews, and rollouts.

A trusted foundation for innovation

The security challenges introduced by AI are real, but they are solvable. The path forward is not to slow down innovation, but to build a better, more secure foundation for it. By moving away from the brittle, opaque security models of the past and adopting a decoupled authorization architecture with a dedicated engine like Cerbos, you are not just mitigating risk. You are building a strategic capability.

You are equipping your organization with an access control system that can keep pace with the creativity and complexity that AI unleashes. In effect, you turn security from a blocker into an enabler for your AI initiatives.

- For the CISO. You gain centralized governance, complete auditability, and the ability to enforce true, fine-grained, least-privilege access for your AI agents. You transform security from a blocker into a confident enabler of your company's AI strategy.

- For the Architect. You eliminate a massive source of technical debt and create a cleaner, more scalable, and more maintainable system. You provide your developers with a clear, consistent, and powerful way to handle authorization, which boosts their velocity. They can focus on building innovative features rather than writing boilerplate permission checks. You can integrate new tools, services, or data sources into your AI stack without worrying that you’ll have to untangle and rewrite a web of permission logic.

Conclusion

The question is no longer if you will face an authorization-related data leak from your AI systems, but when. Every organization implementing AI agents and features is, sooner or later, going to stumble into this issue if it doesn’t address it head-on. The time to act is now. By implementing a modern, externalized authorization architecture, you can build AI applications that are not just powerful, but also safe, compliant, and worthy of your customers' trust.

If you’re keen to learn more or to see a concrete example, read our ebook “Zero Trust for AI: Securing MCP Servers”. If you are interested in implementing authorization for MCP servers, try out Cerbos Hub or book a call with a Cerbos engineer to see how our solution can help you safely expose tools to agents without compromising control, reliability, or auditability.

FAQ

What is Cerbos?

Cerbos is end-to-end enterprise authorization software for Zero Trust environments and AI-powered systems. It enforces fine-grained, contextual, and continuous authorization across apps, APIs, AI agents, MCP servers, services, and workloads.

Cerbos consists of an open-source Policy Decision Point, Enforcement Point integrations, and a centrally managed Policy Administration Plane (Cerbos Hub) that coordinates unified policy-based authorization across your architecture. Enforce least privilege & maintain full visibility into access decisions with Cerbos authorization.